
Remember when you were first learning to live with type 1 diabetes? It was confusing, complicated and so overwhelming, you never thought you’d ever get it right. What diet works best? How should you dose for exercise? Are carbs “bad?” Should I use an insulin pump? All at once, you felt like Dorothy from The Wizard of Oz, where a tornado has taken away everything, and you found yourself in a strange and unusual world.

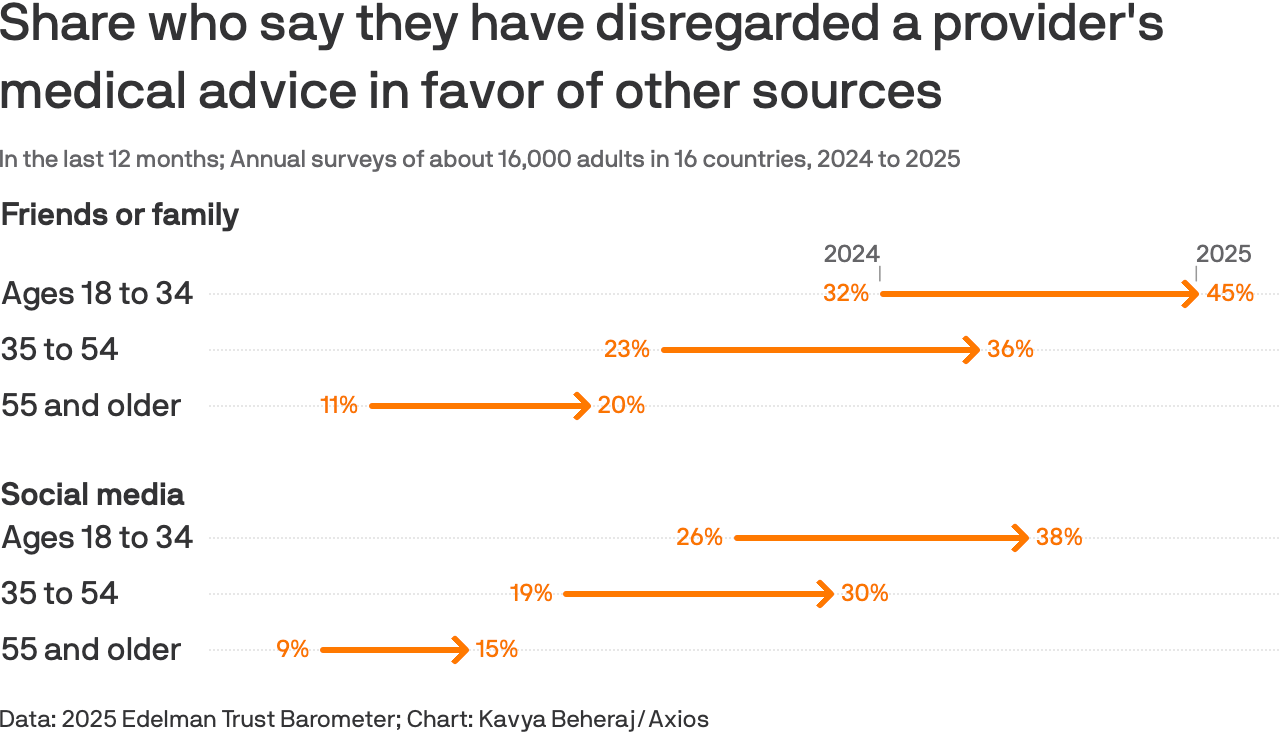

You’d think that the best person to talk to would be your doctor. And yet, surveys cited in the article “Gen Z increasingly listens to peers over doctors for health advice” find that people are increasingly looking to social media for medical advice over their own doctor’s advice.

To be sure, this is across all people, not just diabetics, but that doesn’t mean it’s any different for us. Statistically, fewer diabetics even see an endocrinologist for their care, instead using primary care physicians (PCPs) who are not trained in this highly complex and individualized disease. This is largely because there aren’t enough endos in the first place.

But readers of this newsletter (who are statistically more likely to have had T1D for many years already) might also have another reason: Sometimes, doctors are not really that well tuned to the patient’s unique and individualized needs. Social media is rife with examples of patients whose doctors want them to use or not use a pump, to exercise or not (yeah! really!), to aim for a suspiciously high A1c target for some reason, and so on. I don’t know anyone, myself included, who didn’t feel that their doc was just a tad off about some topic at some point. (OK, I’m being generous.)

All the statistics in that article are fascinating, but the last one is the punchline: “Nearly 60% of young adults say they've made at least one health decision they regret based on inaccurate or misleading information.”

To some degree, it’s understandable. Who, then, is the oracle of wisdom—the Wizard of T1D? Let’s go through the candidates:

Endocrinologists tell you what to do, but it doesn’t always work.

Technology companies sell you devices that should do the work, but they don’t work really well.

Scientists know how things actually work, but only under highly controlled conditions, or on mice.

Epidemiologists study what works in the real world, but not all the time and not for everyone.

Social networks where T1Ds hang out are convinced they know what works and how, and if it doesn’t work for you, you’re doing it wrong.

Podcasters cheerfully try to help you feel better about why nothing works.

Everyone else thinks you have type 2 diabetes and tells you to just eat cinnamon.

Sigh–you’re lost. We’ve all been there. But rest assured, there are ways to figure all this out. In my article, “You’ve Got Type 1 Diabetes! Let the Fun and Agony Begin!” I outline the three stages of learning about T1D. The first stage is listening to your health care team because, frankly, you have no choice. You don’t know anything, and you need to get the basics.

As time passes, you realize not everything works, and the complexity and mystery of the disease is such that you can’t learn that much from your doctor in 15-minute appointments every six months. So, you then decide to learn from other sources. As you cycle through the candidates above, you eventually learn that medical journals are your best bet. This is where you enter stage two.

Here’s the hitch: Medical papers can be hard to understand without a graduate degree in the life sciences. Worse, a lot of studies seem contradictory, misleading, or even blatantly wrong. This could be due to simple sloppiness, but more often than not, other confounding problems muddy things up, including (but not limited to) author affiliations with companies or institutions that aim for particular outcomes. Even those in the medical field have a problem navigating this, and complain that it’s getting worse as online publications make it easier to publish junk science.

A two-part podcast from Freakonomics, “Why Is There So Much Fraud in Academia?”, offers fascinating coverage of this problem. It cited over 10,000 research papers that were retracted last year, for reasons that get pretty odd and unexpected.

But that doesn’t mean there isn’t good, credible clinical data out there, and in a format you can understand. It just takes a keen eye to know what to look for, and to notice when something that should be there isn’t.

I’ll illustrate with a trial called, “Parachute use to prevent death and major trauma when jumping from aircraft: randomized controlled trial.” The aim of the study was “To determine if using a parachute prevents death or major traumatic injury when jumping from an aircraft.” Yes, you read that right. Believe it or not, 23 people actually agreed to jump from an airplane using either a parachute or an empty backpack–and they didn’t know which they would get! If that’s not shocking enough, the conclusion of the study found that not a single person was injured.

No, this was not a fraudulent study–it was a fully legit trial, conducted by a large group of well-respected researchers and scientists from top medical schools in the country, and was published in the highly prestigious British Medical Journal (The BMJ).

You’re probably wondering how such a study and its conclusions could possibly be valid, peer-reviewed and published. Spoiler alert: The aircraft was actually on the ground so the people who jumped didn’t actually face any risk. The authors provided this photo:

The authors’ goal was to demonstrate in an amusing way the techniques that some authors use to conduct trials with suspect aims and conclusions, and still manage to publish their results in medical literature. (This illustrates how the medical community is aware of the problem.)

To avoid being misinformed, one needs to learn how to spot details that matter, especially when data that should be there isn’t. Dr. Peter Attia, the prolific author, physician and research analyst, has a fully detailed and easy-to-follow podcast on the topic called “Good vs. bad science: how to read and understand scientific studies.” Here, he explains how medical studies are done, how to see quality, and how to see flaws. This isn’t to suggest it’s easy–but this primer is a good overview.

Let’s try this in a practical use case: Let’s say you’re looking to see if you should use a closed-loop insulin pump. In an ideal world, you shouldn’t have to think about it. If your endocrinologist recommends a pump, one naturally assumes that they’ve read the medical literature and validated the findings. Right?

Well, it’s not that simple.

Consider a clinical trial published in the prestigious journal The Lancet titled, “Advanced hybrid closed loop therapy versus conventional treatment in adults with type 1 diabetes (ADAPT): a randomised controlled study.” This study is an excellent case for reviewing the techniques described by the parachute study and Dr. Attia’s tutorial.

To begin, the trial showed that those who used a Medtronic closed-loop system had their A1c levels drop from 9·00% to 7·32%, whereas those who used multiple daily injections (MDI) went from 9·07% to 8·91%. At first blush, it would seem the closed-loop system is a clear benefit. There was nothing technically wrong with the way the study was run, to be sure–it was properly randomized, and met all other basic criteria for a well-conducted trial. But, like the parachute trial, which also met the criteria, the authors stacked the conditions in their favor, and didn’t reveal important details, which clouds the real conclusions.
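To put those headline numbers side by side, here is a minimal Python sketch of the arithmetic. The four A1c values are the ones quoted above; the variable and function names are my own, purely for illustration.

```python
# A1c values (%) as quoted from the trial above.
pump_start, pump_end = 9.00, 7.32
mdi_start, mdi_end = 9.07, 8.91


def a1c_change(start, end):
    """Change in A1c in percentage points (negative means improvement)."""
    return round(end - start, 2)


pump_change = a1c_change(pump_start, pump_end)
mdi_change = a1c_change(mdi_start, mdi_end)

# How much more the pump group improved than the MDI group.
between_group_gap = round(pump_change - mdi_change, 2)

print(f"Pump group change: {pump_change} points")
print(f"MDI group change:  {mdi_change} points")
print(f"Gap between groups: {between_group_gap} points")
```

The gap of roughly 1.5 percentage points is what makes the result look so decisive. The arithmetic itself says nothing about why the two groups differed, which is the question that matters here.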

The first item to notice is that all the participants in the study started with an A1c >9%, which is a clear indicator that these patients are not well-practiced in self-management (either because they don’t know, or can’t). No question, this is a valid cohort, as that certainly applies to many T1Ds. But what’s equally important to understand is that, to be trained on using a closed-loop insulin pump, you need to be taught those very techniques. (Otherwise, you don’t know how to use the system.) That education itself has already been demonstrated to be highly effective at helping T1Ds improve their glycemic control, but in this trial, only the pump users were given this training. Had all the participants been given the same education, it’s more likely the MDI group would have also seen improvement.

Similarly, if the trial included patients with lower A1c values, say, in the 7% range (who would likely also be educated), then it would have been interesting to see if and how the two groups compared.

But the study didn’t include those people. This is not to suggest anything other than that it’s a hole in the data that raises questions about the value of this trial as it pertains to the wider population. There’s definitely a population that benefitted, but the confounding factors (especially training and compliance with the protocol) are easy to miss, and may make these conclusions inapplicable to real-world scenarios.

Another problem with the Medtronic study was that it was “low-powered,” which means there weren’t that many participants enrolled. Given the wide metabolic variability among T1Ds, a lower-powered trial cannot possibly produce results that can be generalized to the wider T1D community. Similarly, the study didn’t last long enough, a problem that is systemic among most trials involving T1Ds. It’s one thing for any intervention to show results over a few weeks or months, and another thing entirely to demonstrate long-term benefit. It’s well documented that study participants are more likely to comply with their assigned protocols during the study, but fall out of compliance after the study ends. This is associated with a phenomenon called the Hawthorne Effect.
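To get a feel for why enrollment size matters, here is a rough sketch of how the uncertainty around a between-group difference shrinks as more people are enrolled. It uses the standard formula for the standard error of a difference between two group means, assuming equal group sizes and equal spread. The standard deviation and the group sizes below are hypothetical numbers I picked for illustration, not figures from the Medtronic trial.

```python
import math


def ci_half_width(sd, n_per_arm, z=1.96):
    """Approximate 95% confidence-interval half-width for the difference
    between two group means, assuming both groups have the same standard
    deviation and the same number of participants."""
    standard_error = sd * math.sqrt(2 / n_per_arm)
    return z * standard_error


assumed_sd = 1.0  # hypothetical spread of A1c changes, in percentage points

for n in (20, 80, 200):  # hypothetical participants per group
    margin = ci_half_width(assumed_sd, n)
    print(f"{n:>3} per group: difference known to within about +/-{margin:.2f} points")
```

The margin shrinks only with the square root of the group size, so quadrupling enrollment merely halves the uncertainty. With a population as variable as T1Ds, small trials leave a lot of room for the result to be a fluke.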

Lastly, the MDI users in the trial were given “intermittently scanned continuous glucose monitoring” devices, not CGMs that constantly update their phones or receivers. That is, the participants were given sensors that had to be manually scanned in order to see glucose levels, which means that users were not able to receive alerts when their levels were too high, too low, or moving too quickly. Alerts are essential for a T1D to know when to take action when glucose levels require it. Obviously, the Medtronic system used the more modern CGM, which did provide alerts, giving those users a huge advantage over the MDI users. It’s like the Medtronic users jumped from the airplane on the ground, while the MDI users were in midair. This is not a fair comparison.

Again, this does not mean that the study is flawed, unscientific, or even invalid. But by not revealing critical information like this, a reader would draw the incorrect conclusion that the automated pump generally worked better than MDI writ large. The authors of the trial could have made readers aware of these details, but not only did they not, they added a very benign looking two-word phrase in the title of the article: Advanced hybrid closed loop therapy versus “conventional treatment…”

The term, “conventional treatment” is used by researchers to refer to individuals who are not receiving any intervention at all. In other words, these people are brought into the study for the very purpose of comparing their outcomes with the people who receive the intervention.

While this is the right thing to do, it still requires choosing a representative sample of individuals from the population. In this case, the current recommendations for T1D treatment include learning about counting carbs and dosing properly for them, getting exercise, and, of course, using a CGM with alarms. Some percentage of T1Ds actually follow those recommendations, but in this study, the A1c’s for the “conventional treatment” group were >9%, which is not representative of the T1D population. As stated earlier, this group is either unable or unwilling to take care of themselves, thereby making it more likely that the group using the intervention (the closed-loop system) is going to outperform the control group. This is not a fair comparison, at least, not by the phrasing of the study.

If, instead, the study said that its aim was to determine if a group that was unwilling or unable to manage their T1D—a sizeable group, for sure—might do better with a closed-loop pump, then yes, this study might validate that conclusion.

So, why would the authors not clarify these details or even conduct the study so that the control group was treated otherwise equally to the study group? Well, because Medtronic funded this study and it’s their business to find use cases that tell a compelling story.

Is this unethical? Many think so, others not so much. It’s a muddy picture, even within the medical community. Companies genuinely believe their technology can provide better outcomes for their users, whether it’s drugs, self-driving cars, AI, chatbots, image-generators, social media, or smartphones. All of these can have some serious bad effects, especially in early development. But the understanding is that it's part of the product cycle. We wouldn’t have anything were it not for the belief that the benefits will one day outweigh the harms. And sometimes they’re right.

And insulin pumps are no different. There's no question that the premise of a pump seems valid. Early generations seemed like they'd do well, and each new generation adds more features, reduced size, improved durability, and so on. It may be that they one day perform better too. It’s not my place to imply bad motivations by tech companies. I’m only trying to help readers better understand the data presented to them, and the trials conducted by device manufacturers themselves are almost all tilted in their direction using similar techniques.

This does not negate the fact that many people really do swear by their improved performance with automated systems that they weren’t able to achieve on their own. But again, closer scrutiny of these users shows that it was the pump itself that was the psychological driver to be more attentive and compliant with recommended self-management guidelines. This is a phenomenon known as the Christmas Tree Effect, where people are more willing to invest time and attention into technologies that appeal to them in some ways—hence the flashiness of a Christmas Tree.

Hey, look, if that’s what gets you in good glycemic control, you be you!

Once again, was that Medtronic trial deceptive? Well, they may have hidden information, but they also didn’t make any false claims. They simply reported the results from the two groups. They left it up to the reader to infer conclusions beyond what the company stated. And since most trials involving insulin pumps and closed-loop systems are paid for by those companies, it leaves this lingering impression that pumps really do benefit everyone.

To investigate this, I evaluated many similar articles published in medical literature, but also included articles that critique those trials, while also highlighting trials that were done well. I compiled this analysis into an article called, “Benefits and Risks of Insulin Pumps and Closed-Loop Delivery Systems,” where my aims were more to draw attention to what well-conducted studies found versus conclusions drawn by poorly controlled studies (paid for and conducted by pump companies). By contrast, this article aims to deconstruct a single study to examine why the trial may be suspect.

Problematic trials and research may be concerning, but dealing with it is not as simple as it seems.

A critical care physician and research faculty member at Mass General Hospital & Harvard discussed this phenomenon, and responded to my question about why physicians can be drawn to believe poorly controlled trials. He pointed me to an article he wrote with his colleague Anupam B. Jena called, “The Art of Evidence-Based Medicine,” which they published in the Harvard Business Review. Here’s a relevant excerpt: “There is a critical misunderstanding of what information randomized trials provide us and how health care providers should respond to the important information that these trials contain. The successful application of evidence-based medicine is an art that requires first and foremost, an awareness of the evidence, and also an ability to determine how well the evidence applies to any given patient.”

All this naturally raises the most obvious question: If our own doctors are not seeing this bias, how can we T1Ds do our own research and not fall for bad data? I have four recommendations.

Research Tip #1: Be Wary of Corporate-funded Trials

As illustrated by the Medtronic trial, be skeptical of trials or studies that are funded by companies that have a financial interest in positive outcomes. Again, it doesn’t mean their data is bad, or their trials are flawed, or even that they are being intentionally misleading, though some are. It just means you have to be careful.

In fact, here’s an example of an article that specifically calls out a poorly designed trial and biased results from a manufacturer that published dubious data. The article, which also explains what to look for, is titled “The Importance of Trial Design in Evaluating the Performance of Continuous Glucose Monitoring Systems: Details Matter,” published in the Journal of Diabetes Science and Technology. Here, the authors eviscerate the authors of another trial that intended to compare the Freestyle Libre 3 (made by Abbott) to the Dexcom G7. That comparison trial is titled “Comparison of Point Accuracy Between Two Widely Used Continuous Glucose Monitoring Systems,” and the critique meticulously calls out its blatant errors and procedural problems.

Notably, and unsurprisingly, the comparison trial was funded by Abbott, the maker of the Freestyle line of CGMs. The critique is an excellent exercise in watching one group of researchers rip into the details of another group’s clinical trial.

It was somewhat poetic to note that the authors who critiqued Abbott’s trial were themselves employees of Dexcom, and they were only coming to their own defense. Fine, but I’m reminded of the old proverb, “People who live in glass houses shouldn’t throw stones.” In this case, Dexcom’s own trial that got the G7 approved had issues as well, though nothing as egregious as the Abbott trial.

I wrote about Dexcom’s clinical trial and called out many concerns, but also pointed out that the purpose of Dexcom’s trial was very different than what Abbott did. Dexcom only intended to demonstrate that their G7 was “safe and effective,” not to demonstrate whether—or even if—T1Ds’ glucose control was improved.

This is the distinction from the Medtronic trial, which was intended solely as a marketing tool. The implication that the system “improved” health outcomes for users is the goal, even though the actual trial does not necessarily establish that across a representative population of T1Ds.

That leads to another area of research: real-world vs. lab-based trials.

Research Tip #2: Differentiate Between Types of Trials

The Dexcom G7 trial I cited earlier only aimed to show that the sensor was able to detect glucose levels within a certain threshold of a reference monitor. That makes it an example of an efficacy trial: one that only demonstrates what a device (or drug or other intervention) can do under controlled conditions. By contrast, an effectiveness trial assesses how a given intervention performs under real-world conditions.

Dexcom has not performed an effectiveness trial–in fact, no one has, because the product is too new at the time of this writing.

Again, this is not a criticism of Dexcom, or the outcomes of the G7 trial, or the claims the company has made. However, people should not draw conclusions that the G7 actually helps improve glycemic control over the G6 or any other sensor because there have not been any effectiveness trials to prove this.

Unless you can prove it in a valid randomized effectiveness trial, such a claim cannot be made (with a straight face). And Dexcom knows this: Nowhere in any of their marketing materials do they state that the G7 improves glycemic control over the G6 or any other CGM. They only make the general statement that using CGMs has been proven to improve glycemic control over not using a CGM, which is true.

What would an effectiveness trial look like for the G7? I proposed an approach in my aforementioned article: Have a large group of users start by using a G6 for at least three months and gather their A1c levels and time-in-range (TIR) ratios as a baseline. Then, randomly assign half the cohort to a group that wears a G7 (the study group), while keeping the rest on the G6 as they were (the control group). Continue the trial for another three months. At the end, compare new A1c levels and TIR ratios against their baseline numbers when they were all wearing the G6. The G6 group should not see a change over the six months, whereas the G7 group may or may not see a change. If it’s better, worse, or no different, that would indicate the “effectiveness” of the G7 compared to the G6.
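Here is a minimal sketch of how the core of that comparison could be set up in Python: randomly split the cohort, then compare each group’s average change from its own baseline. Everything in it is hypothetical. The participant IDs, the time-in-range numbers, and the function names are made up for illustration; no such trial data exists.

```python
import random
from statistics import mean


def assign_groups(participant_ids, seed=0):
    """Randomly split participants into two equal halves:
    (G7 study group, G6 control group)."""
    shuffled = list(participant_ids)
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def mean_change(baseline, follow_up, ids):
    """Average change from baseline for the given participants."""
    return mean(follow_up[i] - baseline[i] for i in ids)


# Hypothetical time-in-range (%) after three months on the G6 (baseline)
# and after three more months on the assigned sensor (follow-up).
baseline = {"p1": 60, "p2": 55, "p3": 70, "p4": 65}
follow_up = {"p1": 66, "p2": 57, "p3": 70, "p4": 69}

g7_group, g6_group = assign_groups(baseline)

# The control group's change accounts for drift that has nothing to do with
# the sensor, so the estimate is the difference between the two changes.
estimated_effect = (mean_change(baseline, follow_up, g7_group)
                    - mean_change(baseline, follow_up, g6_group))
print(f"Estimated G7 effect on TIR: {estimated_effect:+.1f} points")
```

Subtracting the control group’s change is the important design choice. If everyone improves simply because they are being watched, that improvement shows up in both groups and cancels out.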

We can improve this trial further by extending the trial so that the G7 users go back to using the G6 again, and then have the control group (that always used the G6) use the G7. This is called a crossover trial because we’re having subjects move between interventions in order to see whether the effects of one intervention actually last, or whether they’re temporary (and if so, why).

For this hypothetical G6/G7 trial, if we were to make it a crossover trial, and if the outcomes of the various groups returned to baseline after switching back, we would know that the difference between the sensors is clinically significant. If they don’t, then we know that the two sensors are probably too similar to matter in clinical (real-world) settings.
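The crossover logic can be sketched the same way. Because every participant spends time on both sensors, each person serves as their own control. The snippet below is only an illustration: the participant labels, the numbers, and the two-point tolerance for “back to baseline” are all assumptions of mine.

```python
from statistics import mean


def within_person_effect(tir_on_g6, tir_on_g7):
    """Average of each participant's (G7 minus G6) time-in-range difference."""
    return mean(tir_on_g7[p] - tir_on_g6[p] for p in tir_on_g6)


def returned_to_baseline(baseline, after_switch_back, tolerance=2.0):
    """True if every participant's result after switching back to the G6
    is within `tolerance` points of their original G6 baseline."""
    return all(abs(after_switch_back[p] - baseline[p]) <= tolerance
               for p in baseline)


# Hypothetical time-in-range (%) for three participants in each period.
g6_baseline = {"a": 60, "b": 70, "c": 65}
on_g7 = {"a": 66, "b": 72, "c": 69}
back_on_g6 = {"a": 61, "b": 70, "c": 64}

effect = within_person_effect(g6_baseline, on_g7)
reverted = returned_to_baseline(g6_baseline, back_on_g6)

print(f"Average change while on the G7: {effect:+.1f} points")
print(f"Returned to baseline on the G6: {reverted}")
```

In this made-up data, the numbers rise on the G7 and fall back on the G6, which is the pattern that would point to the sensor itself as the cause.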

This is part of what makes studying T1D so incredibly hard. There are so many unique and variable differences between individuals, with all sorts of confounding factors that can either amplify or mute certain interventions, that it’s hard to gain widespread knowledge about what works across the population.

Fortunately, there are other ways to conduct research besides direct interventional trials. One example is the “retrospective study,” which looks at historical data in the real world over periods of time, allowing for a clearer picture of health outcomes.

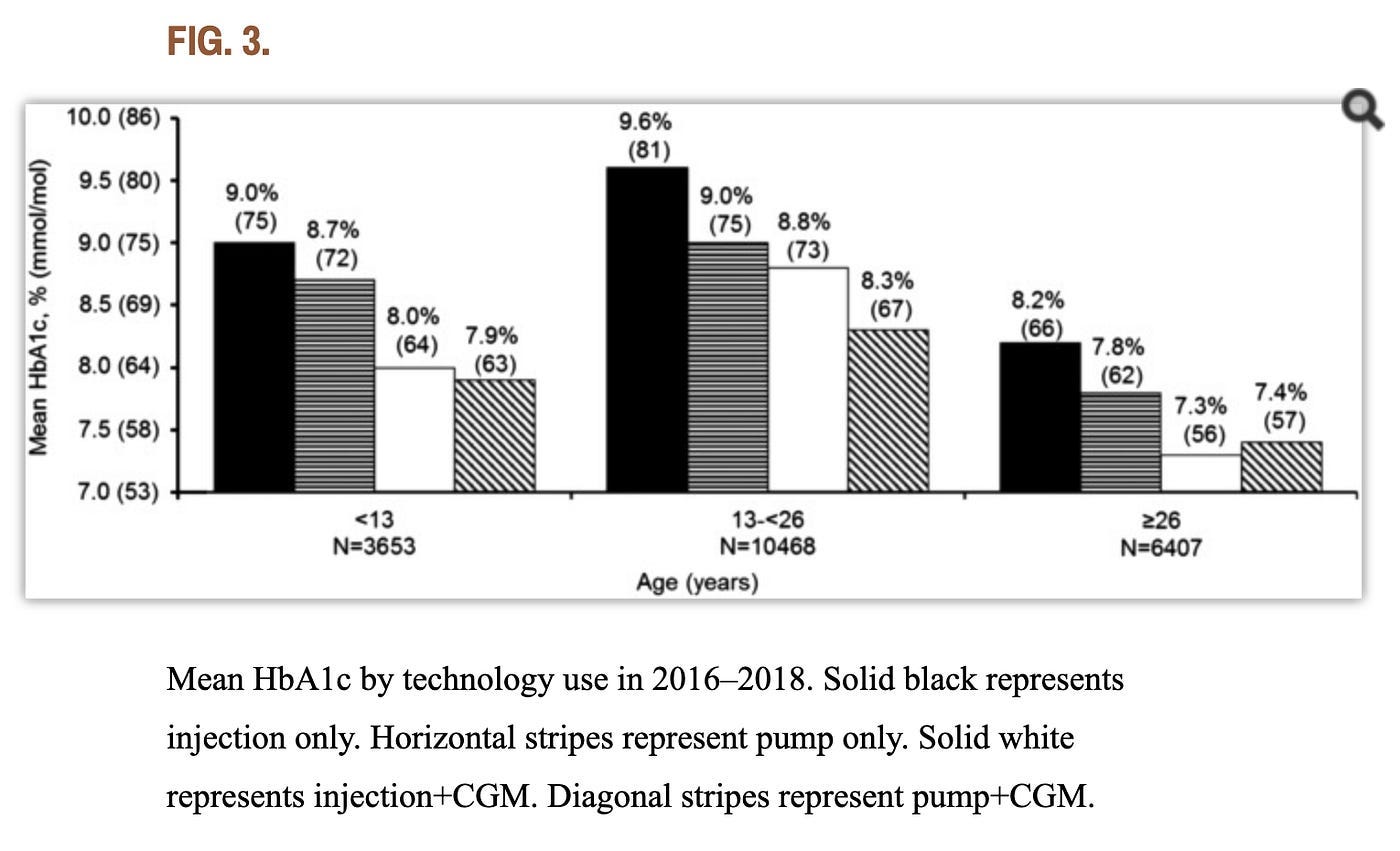

Since we’re talking about insulin pumps and CGMs, here’s a good example of a quality retrospective study: “State of Type 1 Diabetes Management and Outcomes from the T1D Exchange in 2016–2018.” The authors gathered three years’ worth of data from over 20,000 participants across all age groups. What’s more, they didn’t just compare pump use versus MDI; they also compared CGM use and nonuse, the combination of which yielded four different test groups. A graph from the study’s findings is below. (Note that N=the number of participants in each group.)

As the graph shows, those who performed better with pumps over MDI were those who were under 26, who are mostly kids and teens, along with those who haven’t had diabetes long enough to master self-management techniques. For everyone else (adults over 26), the researchers’ analysis concluded that “there isn’t a substantial difference between A1c outcomes for those who manually take insulin injections compared to those who use pumps for those who use CGMs.”

Retrospective studies are perhaps the most revealing, but the problem is they take years, even decades, to gather enough data to reveal real-world outcomes. Great when you can get it, but it doesn’t help with evaluating newer technologies.

You can now use everything we’ve learned so far and start searching medical literature for all of it, but there’s another key research skill you need: finding good sources of medical literature, which brings us to…

Research Tip #3: Use Google Scholar to Find “Literature Reviews”

This leads to my third recommendation: Use Google Scholar to search for things you’re curious about and read what independent researchers are finding, especially those who author “review papers” that cite a long list of studies and aggregate them together. This is called a “literature review” (or, when the authors statistically pool the results of the studies, a “meta-analysis”).

To illustrate, below are the first few results that come from a Google Scholar search for “insulin pumps and rates of obesity.” One can just read the headlines and small text excerpts to get an idea of the kind of studies that have been conducted (some of which are review papers):

One only needs to read one (or a few) of these articles to understand that insulin pump therapy is either exacerbating obesity in T1Ds, or is contributing to cardiovascular disease. But, again, the devil is in the details. It’s not the pump itself that’s causing harm. Weight gain for T1Ds in general is due to basal rates being too high (which leads to lower glucose levels that require eating to compensate). And, this problem exists with MDI users as well as pump users. What the literature appears to suggest is that pump users tend to be less engaged with granular details in their daily management (unless they’re in a study), so they don’t pay as close attention to their basal dosing and regular bolusing as MDI users do. Because MDI users intentionally look at their glucose levels more often and make in-the-moment decisions, they are, by definition, more engaged than pump users.

Again, retrospective studies and independently run trials reveal a level of nuance that a corporate-controlled study will not.

Research Tip #4: Experiment on Yourself

My last recommendation for doing research is actually the simplest and easiest: Experiment on yourself. Dorothy eventually learned that the Wizard of Oz really didn’t have any superpowers, and she also learned that she had the power to go back home all along, without anyone’s help. All she had to say was, “There’s no place like home.”

In a similar fashion, T1Ds should repeat the phrase, “There’s no diabetic like me.” You have always had the power to control your glucose, not anyone or anything else. Sure, you can use things, but only your direct engagement with T1D will produce any meaningful health outcomes.

Start by doing good research, evaluate independent analysis, see what feels like it applies to you, and if it all seems to fit, try it yourself. What you’re more likely to find is that the actual tools you use (a pump or an insulin pen) do not affect your glycemic control unless you choose to invest the time and attention necessary to achieve healthy outcomes. It may well be that your tools give you the motivation to be more compliant, but that’s a lifestyle choice, not a scientific choice.

It should be no surprise that this is the conclusion from the authors of a 2022 review of closed-loop systems in the journal Diabetes Care. In their article titled “Diabetes Technology: Standards of Medical Care in Diabetes—2022,” the authors wrote:

“The most important component in all of these systems is the patient. [...] Simply having a device or application does not change outcomes unless the human being engages with it to create positive health benefits. [...] Expectations must be tempered by reality—we do not yet have technology that completely eliminates the self-care tasks necessary for treating diabetes.”

When you can do this type of research and self-analysis, you’ve completed the second stage of T1D self-management. Congrats.

Speaking of the three stages of T1D self-management, you may be wondering what the third stage is. Alas, it’s accepting the fact that there’s no Wizard of T1D that knows everything. The ecosystem of doctors, researchers, scientists and others all have something to contribute, but no single group has all the answers. Getting comfortable with that is when you are finally able to control your own agency, giving you greater confidence to act on your own.

To read more about these three stages, see “You’ve Got Type 1 Diabetes! Let the Fun and Agony Begin!”, and prepare to laugh.

Another great piece! I'm really curious on your thoughts on pumps and weight gain. It's a constant battle. I'm 49, A1c is 5.4%, total daily dose of about 45 units. I weigh 79.5 kg, so that doesn't seem like an "excessive" amount of insulin. Exercise a lot....yet cannot lose weight for the life of me. Part of it may be that with a 5.4 a1c, I do have lows, which result in some excess calories. I know that a slightly higher a1c/average glucose is fine...but psychologically it's tough. I think every T1D goes thru this battle. I'm curious if you have thoughts on your personal experience.

Thank you! As a type 1.5 diabetic diagnosed at age 54 13 years ago. I have been on traditional injections, pens, and closed loop Medtronic and Tandem pump/CGM systems. My ‘best’ A1C values were when I was using Lantus and Humalog pens and determining insulin dosage myself based on the amount and types of carbohydrates I was eating and sort of following my endocrinologist’s advice. You really have to educate yourself about this disease and become your own doctor. I hate to sound like a ranting psycho BUT in my opinion medical device companies and pharmaceutical companies are in the business to profit and make money off chronic diseases. They don’t seem to want a cure since that will stop the $$ coming in. The studies that are funded by medical device companies and the amount of $$ they pour in to colleges to train future doctors to diagnose and prescribe along their party line is a very good example - it’s so frustrating! So again thank you for speaking up and sharing your knowledge, I hope it inspires diabetics everywhere to empower and educate ourselves